Quantitative Results

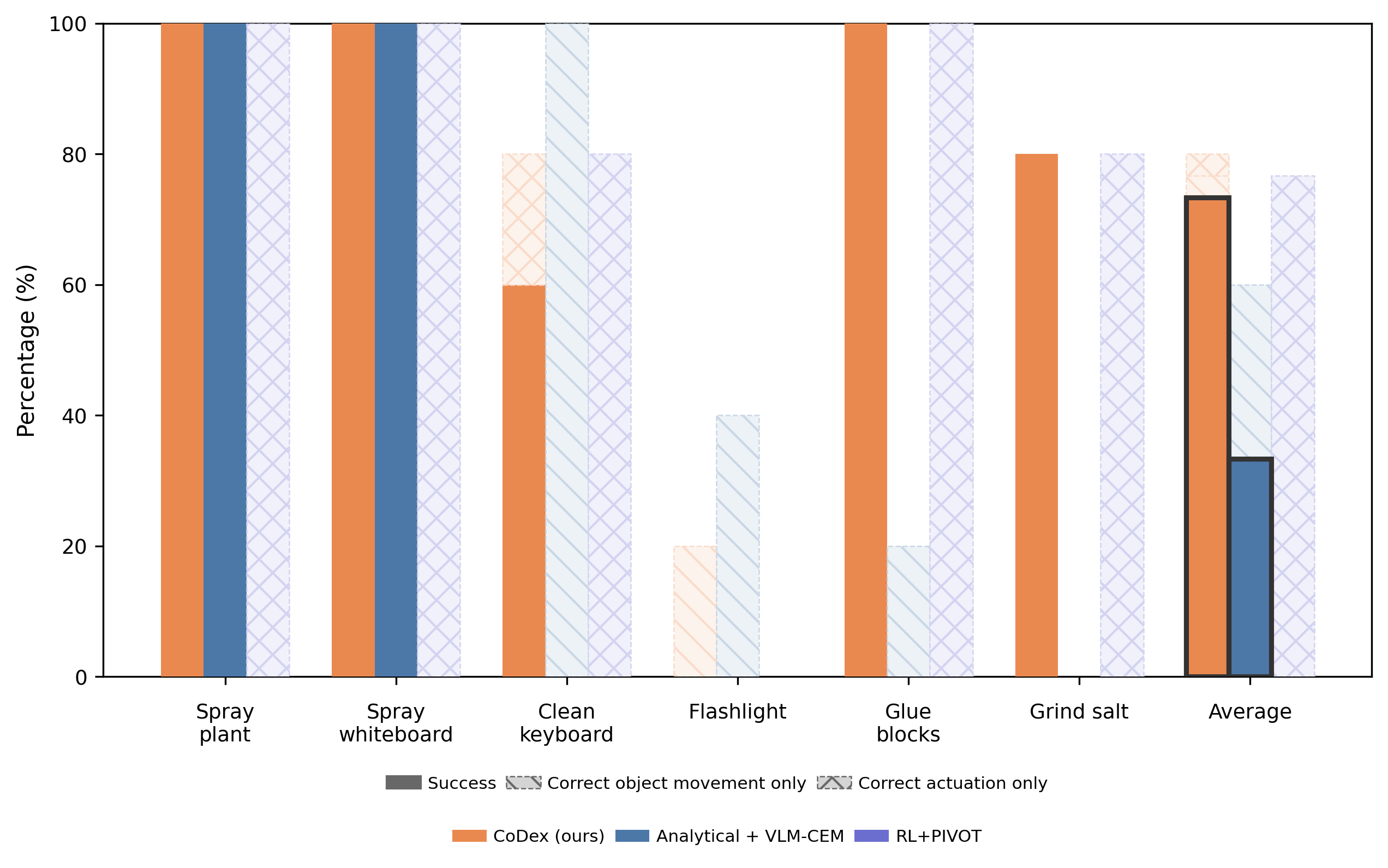

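Real‑world performance across six CD‑FOM tasks (5 trials each). The bottom row gives each method's overall success over all 30 trials. Because every task has the same number of trials, this pooled rate equals the mean of the per‑task success rates.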

| Task | CoDex (Ours) | Analytical + VLM‑CEM | RL+PIVOT |
|---|---|---|---|
| Spray whiteboard | 5/5 (100%) | 5/5 (100%) | 0/5 (0%) |
| Spray plant | 5/5 (100%) | 5/5 (100%) | 0/5 (0%) |
| Clean keyboard | 3/5 (60%) | 0/5 (0%) | 0/5 (0%) |
| Illuminate toy | 0/5 (0%) | 0/5 (0%) | 0/5 (0%) |
| Glue blocks | 5/5 (100%) | 0/5 (0%) | 0/5 (0%) |
| Grind salt | 4/5 (80%) | 0/5 (0%) | 0/5 (0%) |
| Average (Overall) | 22/30 (73%) | 10/30 (33%) | 0/30 (0%) |
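The bottom-row arithmetic can be checked with a short script. This is a minimal sketch: the per-task counts are copied from the table, while the helper names `overall` and `mean_task_rate` are hypothetical and are not part of CoDex or its evaluation code. It pools successes across tasks and confirms that, with 5 trials per task, the pooled rate matches the unweighted mean of per-task rates.

```python
from fractions import Fraction

# Per-task successes copied from the table, in row order:
# Spray whiteboard, Spray plant, Clean keyboard, Illuminate toy, Glue blocks, Grind salt.
TRIALS_PER_TASK = 5
RESULTS = {
    "CoDex (Ours)": [5, 5, 3, 0, 5, 4],
    "Analytical + VLM-CEM": [5, 5, 0, 0, 0, 0],
    "RL+PIVOT": [0, 0, 0, 0, 0, 0],
}


def overall(successes, trials_per_task=TRIALS_PER_TASK):
    """Hypothetical helper: pool over tasks, returning (total successes, total trials)."""
    return sum(successes), trials_per_task * len(successes)


def mean_task_rate(successes, trials_per_task=TRIALS_PER_TASK):
    """Hypothetical helper: unweighted mean of per-task success rates, kept exact."""
    rates = [Fraction(s, trials_per_task) for s in successes]
    return sum(rates) / len(rates)


for method, successes in RESULTS.items():
    wins, total = overall(successes)
    pooled = Fraction(wins, total)
    # Equal trials per task make the pooled rate identical to the mean of task rates.
    assert pooled == mean_task_rate(successes)
    print(f"{method}: {wins}/{total} ({round(100 * pooled)}%)")
```

Exact fractions are used so the equality check is not affected by floating-point rounding; the percentage is rounded only for display.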

CoDex reliably solves the two spray tasks and Glue blocks (5/5 each), succeeds on most Grind salt trials (4/5), degrades partially on Clean keyboard (3/5), and fails every Illuminate toy trial. The baselines mirror the composition plot: Analytical + VLM‑CEM succeeds only on the two spray tasks, and RL+PIVOT achieves no holistic success despite frequent correct actuations.